What is the role of AI in music?

Music, Computation

AI technologies have proven effective at performing tasks at scale. Machine learning is finding its way into every line of business and transforming many industries. Among musicians, however, AI has not enjoyed as warm a reception as it has in other fields. As a classically trained pianist, I find it unclear how a musician can benefit from AI. In setting up this page, I aim to explore the role of AI in music composition and production through a series of projects.

Objective

To begin with, I would like to draw a clear distinction between the two main objectives of music generation. A system that generates a complete piece of music assumes the role of a composer. Amper and Aiva, for example, strive for the autonomous creation of original music for content creators. In contrast, a system that generates music fragments assists with composition. The earliest record of such a system dates back to the 18th century: Musikalisches Würfelspiel uses dice to generate arrangements of precomposed music segments. Digital audio workstation plugins such as iZotope Ozone and Jamahook are present-day examples of assistive tools for musicians and producers.
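The dice game is simple enough to sketch in a few lines of Python. This is only an illustration of the idea, assuming two dice and a lookup table with one row per possible total and one column per bar. The table size and the segment labels are invented placeholders, not the contents of any historical edition.

```python
import random

# Hypothetical lookup table: for each dice total (2..12) and each bar
# position, a label standing in for a precomposed one-bar segment.
N_BARS = 8
SEGMENT_TABLE = {
    total: [f"segment_{total}_{bar}" for bar in range(N_BARS)]
    for total in range(2, 13)
}


def roll_two_dice(rng):
    """Return the sum of two six-sided dice."""
    return rng.randint(1, 6) + rng.randint(1, 6)


def arrange_piece(rng):
    """Pick one precomposed segment per bar, each chosen by a fresh roll."""
    return [SEGMENT_TABLE[roll_two_dice(rng)][bar] for bar in range(N_BARS)]


# A seeded generator makes the arrangement reproducible.
piece = arrange_piece(random.Random(0))
print(piece)
```

No music is composed at generation time here: the dice only select and order material that already exists, which is what makes the game an assistive system rather than a composer.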

The nature of the content to be generated is characterised by texture, usage, mode of generation, and musical style. Texture describes the overall quality of sound in a piece of music. Qualities such as tempo, melody, harmony, rhythm, and timbre all affect texture. In musical terms, the types of texture are monophony, homophony, polyphony, and heterophony. Usage refers to the destination of the generated content. This can be a sound system, for which the content must be an audio file; a software instrument, for which the content should be a MIDI¹ file; or a musician, for whom the desired format is some form of music notation (music score, guitar tab, etc.). The mode of generation may or may not involve human interaction. The style of the generated music largely depends on the choice of training dataset.

We will explore these aspects of music generation by creating and implementing a series of AI music generation tools. The focus is on finding ways for musicians to benefit from AI. Accordingly, a good starting point would be an AI that assists with composition.

Projects

AI Bassist

AI Bassist is a symbolic model that generates monophonic bass melodies in the style of minimal house and techno music. It is built into a Yamaha DX7 emulator in a web application. The target destination is a software synthesiser that reads MIDI files. Human interaction during content generation is possible but limited to a SoftMax temperature parameter², which is not yet exposed in the user interface (UI) in the current release.
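To show what a temperature parameter does in general, here is a minimal Python sketch of temperature-scaled sampling. It is not the AI Bassist code: the function names and the three example scores (`logits`) are hypothetical, chosen only to show how dividing scores by a temperature before the softmax changes the resulting probabilities.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_note(logits, temperature, rng):
    """Draw one note index according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Hypothetical scores for three candidate notes.
logits = [2.0, 1.0, 0.1]
cold = softmax_with_temperature(logits, 0.5)  # sharper distribution
warm = softmax_with_temperature(logits, 2.0)  # flatter distribution
note = sample_note(logits, 1.0, random.Random(0))
```

A low temperature concentrates probability on the highest-scoring note, so the output becomes more predictable. A high temperature flattens the distribution and lets less likely notes through more often.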

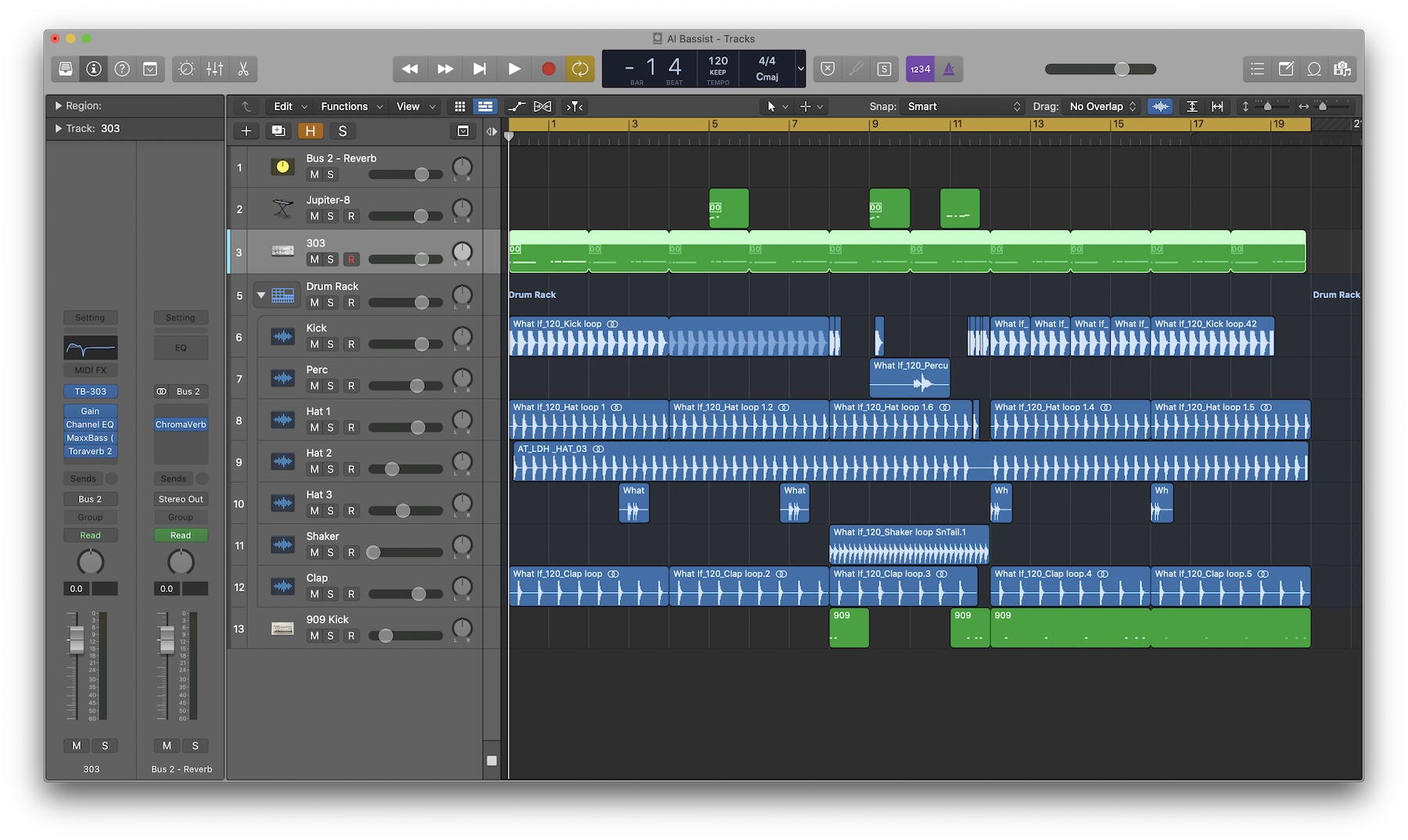

Limited access to musical instruments and limited knowledge of music theory are the main obstacles for novice producers. Exploring creativity and articulating artistic expression can be especially challenging when all audio/visual feedback comes from within a digital audio workstation (DAW). AI Bassist puts the producer in the audience's position by generating 2-bar melodies in MIDI format, which can be readily processed by any software instrument. The producer can focus on sound design and arrangement, but also has the option to modify the melody. The demo track below is a collaboration between myself and AI Bassist.

Two generated MIDI note sequences were played through a Roland TB-303 and a Jupiter-8. The 303 sets the acidic scene and the arpeggiated Jupiter-8 adds occasional polyphony. My contribution was the drum track, audio FX, and arrangement.

Personally, I feel that AI Bassist can benefit a subset of musicians. Its simplicity and flexibility almost overshadow its AI nature. I believe AI tools with similar intent can help build a good reputation for AI in the music industry. In the coming weeks, I will tweak the model to optimise content generation. In the meantime, feel free to share your experience via the contact form.

More to follow!

1. MIDI (Musical Instrument Digital Interface) is an industry-standard music technology protocol that connects digital musical instruments, computers, tablets, and smartphones from many different companies.

2. The SoftMax temperature may be considered a parameter that affects the confidence of the model: lower values make its output more predictable, while higher values make it more varied.